Research

I am currently developing reinforcement learning methods. I have also been working on research on spoken dialogue systems.

Reinforcement Learning

Efficient Method for Estimating the Influence of Experiences on RL Agents' Performance (2023 ~ 2025, NEC and AIST)

- Takuya Hiraoka, Guanquan Wang, Takashi Onishi, Yoshimasa Tsuruoka. Which Experiences Are Influential for RL Agents? Efficiently Estimating The Influence of Experiences. Reinforcement Learning Conference (RLC) / Reinforcement Learning Journal (RLJ), 2025. poster, slides, source code, demo video 1, demo video 2, arXiv, RLJ version

Simple but Efficient Reinforcement Learning Method for Sparse-Reward Goal-Conditioned Tasks (2023, NEC and AIST)

- Takuya Hiraoka. Efficient Sparse-Reward Goal-Conditioned Reinforcement Learning with a High Replay Ratio and Regularization. arXiv:2312.05787, 2023. source code

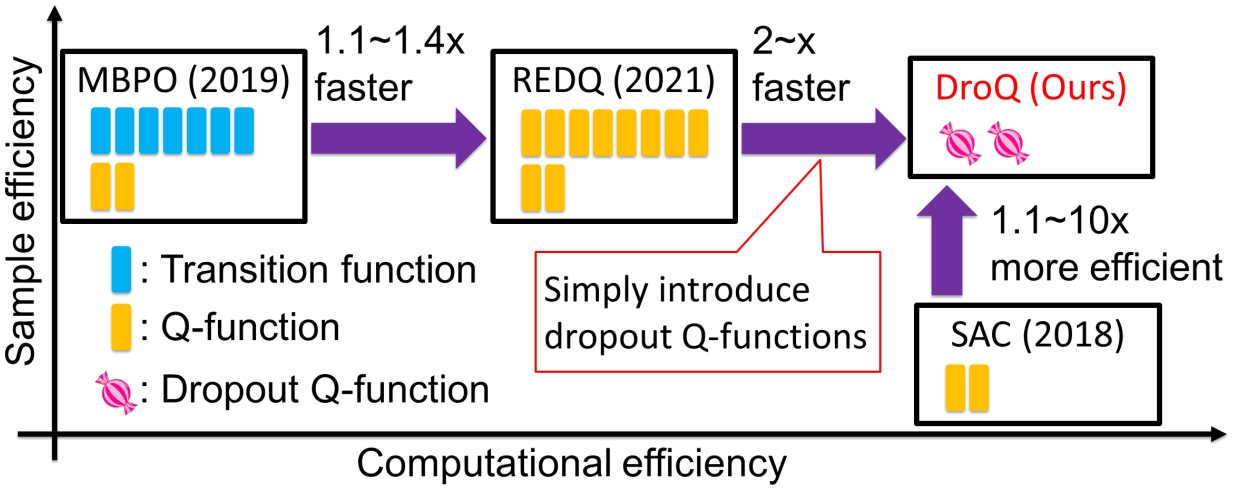

Very Simple but Doubly Efficient Reinforcement Learning Method (2021, NEC and AIST)

- Takuya Hiraoka, Takahisa Imagawa, Taisei Hashimoto, Takashi Onishi, Yoshimasa Tsuruoka. Dropout Q-Functions for Doubly Efficient Reinforcement Learning. The Tenth International Conference on Learning Representations (ICLR), April 2022. poster, slides, arXiv version, source code

Model-Based Meta Reinforcement Learning (2020, NEC, AIST and RIKEN)

- Takuya Hiraoka, Takahisa Imagawa, Voot Tangkaratt, Takayuki Osa, Takashi Onishi, Yoshimasa Tsuruoka. Meta-Model-Based Meta-Policy Optimization. The 13th Asian Conference on Machine Learning (ACML), November 2021. poster, video presentation, demo video, arXiv version, source code

Robust Hierarchical Reinforcement Learning (2019, NEC and AIST)

- Takuya Hiraoka, Takahisa Imagawa, Tatsuya Mori, Takashi Onishi, Yoshimasa Tsuruoka. Learning Robust Options by Conditional Value at Risk Optimization. Thirty-third Conference on Neural Information Processing Systems (NeurIPS), December 2019

Dialogue Systems

Inquiry Dialogue System (2018, NEC)

- Takuya Hiraoka, Shota Motoura, Kunihiko Sadamasa. Detecticon: A Prototype Inquiry Dialog System. 9th International Workshop on Spoken Dialog Systems (IWSDS), May 2018

Persuasive Dialogue System (~2016, NAIST)

- Takuya Hiraoka, Graham Neubig, Sakriani Sakti, Tomoki Toda, Satoshi Nakamura. Reinforcement Learning of Cooperative Persuasive Dialogue Policies using Framing. The 25th International Conference on Computational Linguistics (COLING), August 2014

- Takuya Hiraoka, Yuki Yamauchi, Graham Neubig, Sakriani Sakti, Tomoki Toda, Satoshi Nakamura. Dialogue Management for Leading the Conversation in Persuasive Dialogue Systems. Automatic Speech Recognition and Understanding Workshop (ASRU), December 2013

Example-based Dialogue System (2016, NAIST)

- Takuya Hiraoka, Graham Neubig, Koichiro Yoshino, Tomoki Toda, Satoshi Nakamura. Active Learning for Example-based Dialog Systems. 7th International Workshop on Spoken Dialog Systems (IWSDS), January 2016. source code

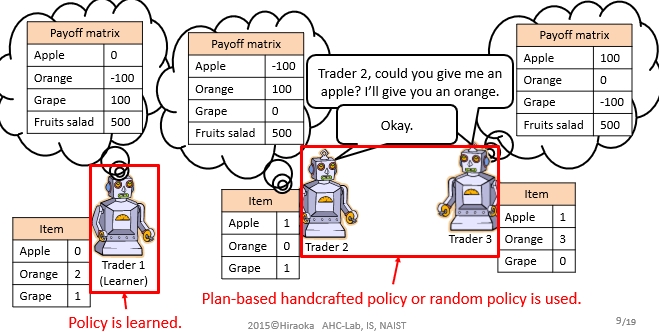

Negotiation Dialogue System (2015, NAIST and USC)

- Takuya Hiraoka, Kallirroi Georgila, Elnaz Nouri, David Traum, Satoshi Nakamura. Reinforcement Learning in Multi-Party Trading Dialog. The 16th Annual SIGdial Meeting on Discourse and Dialogue (SIGDIAL), September 2015. source code, video recordings and slides